Years after the debut of Google Glass, Apple is likely to show us its version later this year. But what exactly will they do?

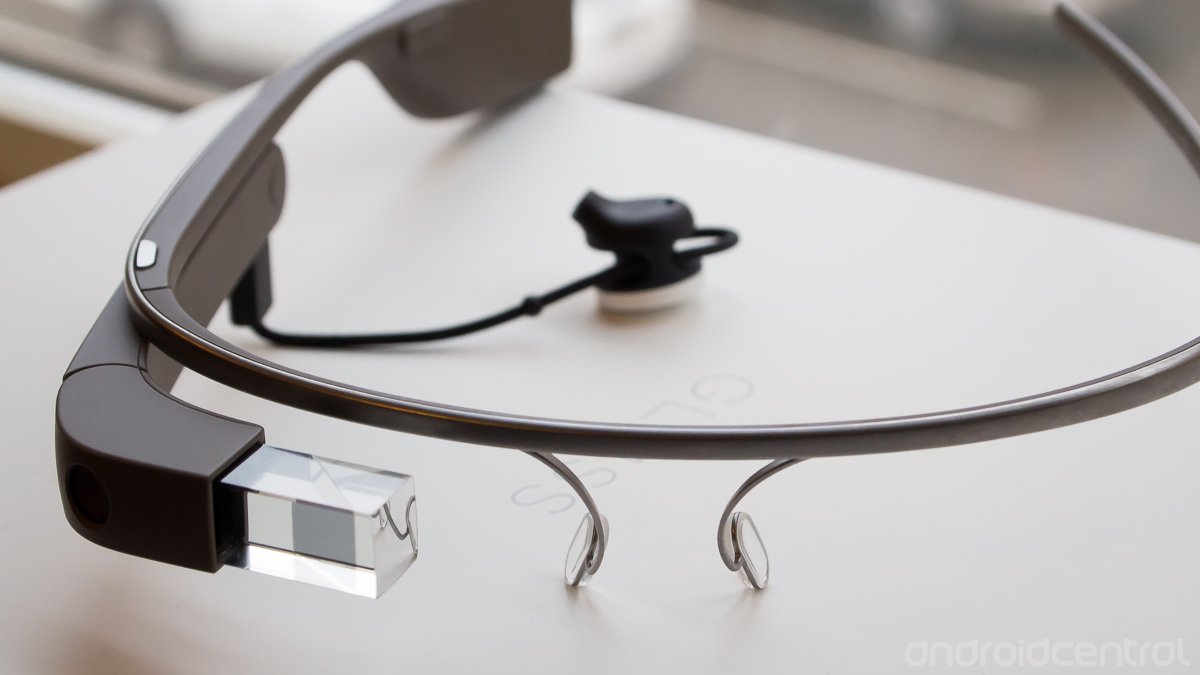

Smart glasses aren't just a thing from the movies that you can't wear at the theater; they actually exist. Google debuted its Google Glass Explorer program in 2012 as an experimental product that you had to sign up for a chance to buy, and since then we've seen smart glasses from companies ranging from Panasonic to North to Snapchat. But despite that proliferation, it seems that very few people want them or even really care about them.

So why, eight years after Google Glass, is Apple releasing its own smart glasses? And we know they are: code for the product was accidentally included in an official developer tools release, and YouTuber Jon Prosser has a load of details on exactly what to expect and when. Apple really is doing this.

But Apple is doing it a bit differently than we've seen before. That's the benefit of watching eight years of smart glasses evolution. According to Prosser and his information — which he freely admits is based on a prototype device — the price will start at $499, there will be no camera, all the work is done on the phone, and Apple Glass won't work with sunglass lenses. Other than the price, all of these things feel like drawbacks. But maybe not.

Apple Glass has been the worst-kept secret for years, but now we pretty much know everything.

Apple isn't rehashing Google Glass, and the two companies have very different visions of what "smart glasses" are. Google wanted to deliver contextual content like notifications of incoming calls as well as do more ambitious things like displaying a live view of turn-by-turn navigation while you were driving. The display was just a tiny prism lens, but it acted more like an Android smartphone than just an accessory: Google Glass was actually a smart device on its own.

Apple is basically delivering a second display which will probably have very restrictive rules about what can be shown on it. Notifications will surely be there, and I expect you'll be able to answer calls or have Siri send a text through Apple Glass. But I don't think you'll see a live map when you are using Apple Maps, for example. Apple is going all-in with AR, and Apple Glass makes for a perfect way to do it. The lack of a camera will be a limiting factor for "fun" AR apps, but remember this is version one. If you had to use the original iPhone today you'd end up smashing it against the wall.

While Google Glass was a contextual computer you wore on your face, Apple Glass is going to be an awesome way to deliver AR content. It's hard to compare the two.

We need to compare Apple Glass to the Apple Watch, not Google Glass. Google Glass was never a consumer product, still exists as an enterprise tool, and was an actual wearable computer that you strapped to your face. The idea was cool, but the execution was definitely not. Glass' biggest hurdle, and the thing that probably showed Google that releasing it to the public was a bad idea, was the perceived invasion of privacy that other people felt when you were wearing it.

Never mind that every smartphone has a camera. Never mind that there are security cameras with facial recognition built-in all over the place. Never mind that Google Glass' prism glowed like a jack-o-lantern if you used the camera. People still freaked out because Glass had a camera. Apple is getting around this privacy hurdle by not putting a camera in Apple Glass.

Instead, there is a LiDAR sensor. The 2020 iPad Pro ships with a LiDAR sensor, and leaks suggest that the iPhone 12 will also house one. LiDAR is very useful for mapping what the sensor and its companion camera can see, but I'm not exactly sure how it can work on its own. I don't need to know, though, because Apple Glass does all of its processing on the iPhone. An app like Apple Maps can send data about your location to the smart display and show you something useful, like information about a linen sale if you're at Target, or something cool, like playing a video while you're at the Lincoln Memorial.

What LiDAR can do is track motion, so you could, in theory anyway, control things using gestures. Things like dismissing notifications or Pixel 4-style audio controls are entirely possible using LiDAR. LiDAR is really cool even if it's not flashy. It's a tool.

Wearables all seem to underwhelm at first and Apple Glass probably won't be an exception.

The first Apple Watch launched without the ability to do anything on its own, and instead relied on an iPhone for any processing. It was a remote display and there was a bit of criticism about how useful it was. Apple has since done an outstanding job creating a tiny Mac in a box to put inside the Apple Watch and the people who own one love it and find it useful every day.

That's what to expect from Apple Glass. The first version will be a bit underwhelming and lean heavily on the Apple name to keep the project afloat while improvements are made. As long as the integration with AR applications on the iPhone is done right, that won't be difficult for Apple to work around. AR can be fun, but it also can be useful. When done on a pair of glasses, it could be more fun and more useful.

One thing we know Apple Glass will never do is work with your Android phone. For all the cool things that could be possible with a pair of smart displays directly in front of your eyeballs, knowing that for most of us none of them matter is a bit of a bummer. Apple will sell millions of them, and since they aren't able to work with tinted lenses, you'll see people with an iPhone and Apple Glass wearing regular glass lenses without a prescription because there's an Apple logo on the box. Apple doesn't need Android users, and until it does, this won't change.

from Android Central - Android Forums, News, Reviews, Help and Android Wallpapers https://ift.tt/2M1kIOl
