There was no Facebook data breach. You gave them the data, and they gave it away — all according to plan.

News reports have been swirling about Facebook (a company you know), Cambridge Analytica (a company you might not have heard of), and the 2016 United States presidential election. It's an important story, but I've noticed a critical misunderstanding, or miscasting, of it in many media outlets, even those that are supposed to be tech-savvy. You may have seen this story described as a "breach" or a "leak."

The reality is far more distressing: Facebook basically gave away our profile data. The company has always made this data available; it just never expected it to be used like this.

Facebook, Cambridge Analytica, and what happened

Cambridge Analytica is a data mining and analysis firm that specializes in delivering, to quote its mission statement, "Data-Driven Behavioral Change by understanding what motivates the individual and engaging with target audiences in ways that move them to action."

Which is to say, it uses profile data to tailor messaging and advertisements. This isn't a new concept — magazine, TV, and radio ads have long been customized to subscriber demographics. What's new is the breadth, depth, and precision of the targeting. The nature of the internet means that a huge amount of data about you is available for the taking, and you've given it all away.

Cambridge Analytica worked with Donald Trump's 2016 presidential campaign, using the data of 50 million Facebook users to target advertisements at voters it believed would be receptive to the campaign's message. It was an effort unprecedented in politics. How much it affected the vote is unanswerable, but there's little doubt that it had an effect. So how did Cambridge Analytica get that much data?


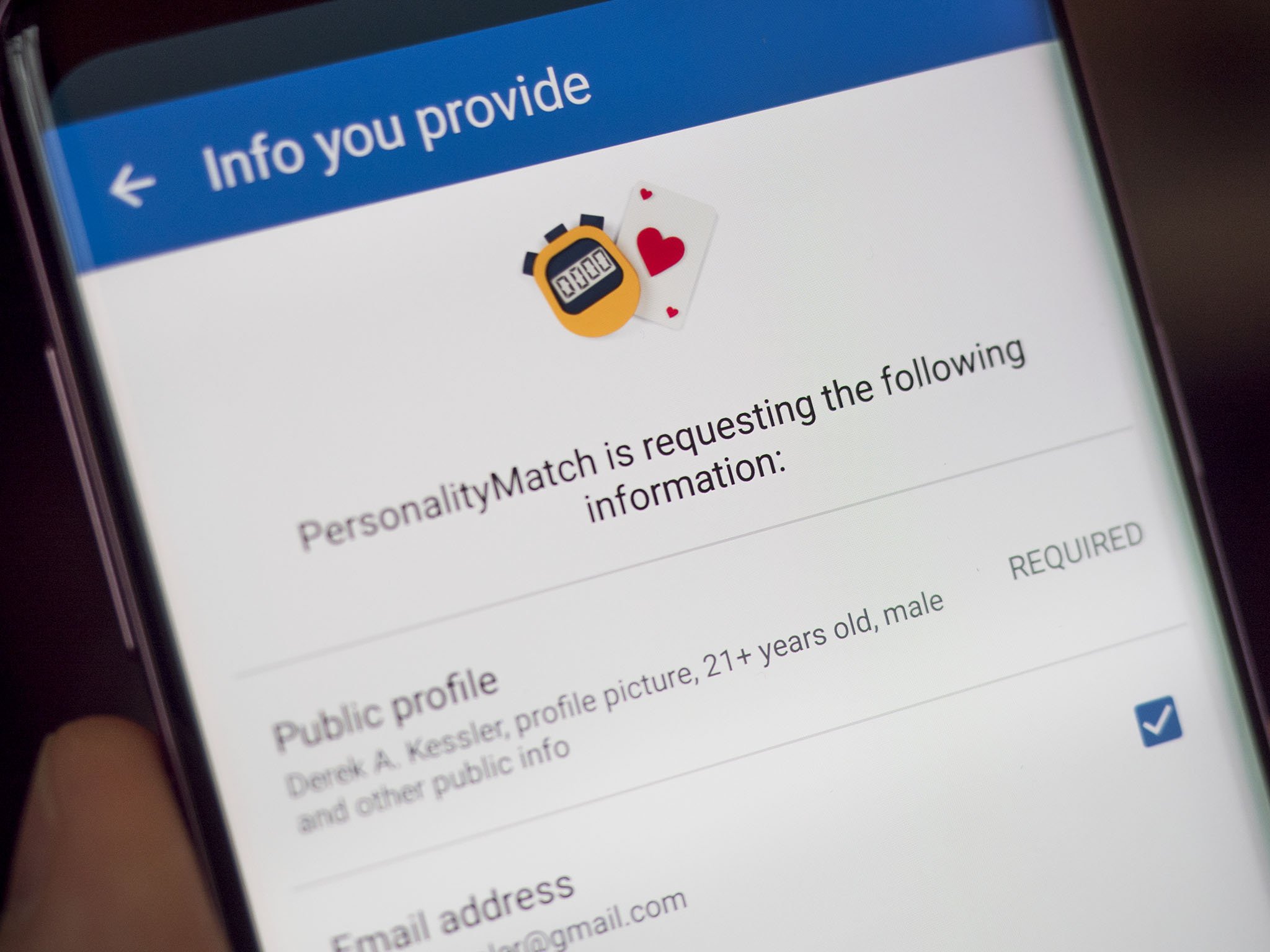

According to some excellent reporting by The New York Times, Cambridge Analytica built a personality survey app that required a Facebook log-in. That app was distributed by a compliant Cambridge University professor, who claimed the data would be used for research. This was entirely legal and in accordance with Facebook's policies and the profile settings of its users. That the data was passed from the professor to Cambridge Analytica was a mere violation of Facebook's developer agreement.

Around 270,000 Facebook users reportedly downloaded the survey app. So how did Cambridge Analytica harvest the data of some 50 million users? Because the rest of those users were Facebook friends of the people who downloaded the app.

How this happened

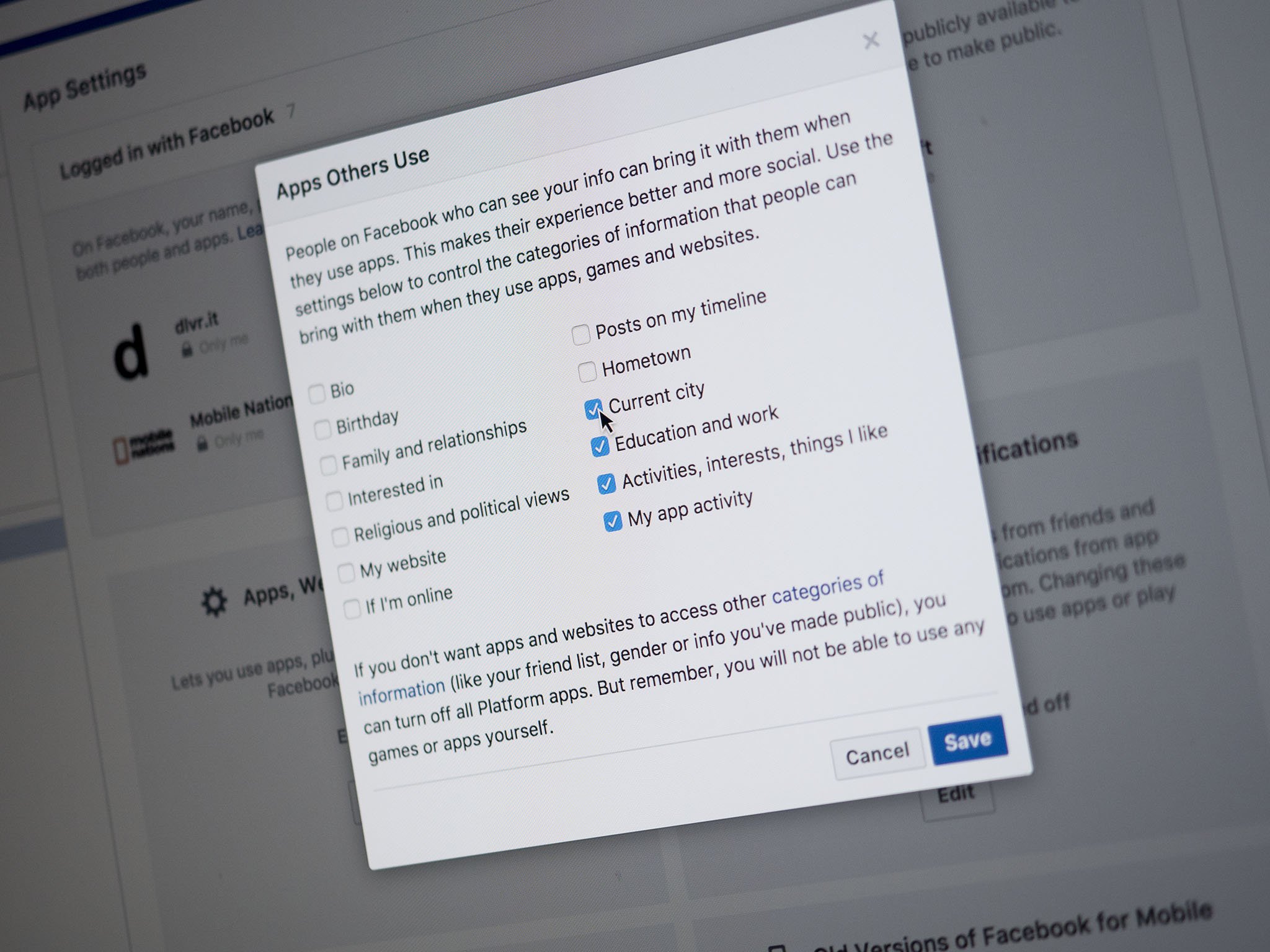

Facebook's policies and default privacy settings allow apps to collect massive amounts of profile data. That information is supposed to be used to provide you with a customized product; in reality, it usually feeds tailored advertisements. The most painful part is that we users opened the door to these apps: the user has to download the app and grant it permission to access their Facebook profile, and the app tells you right up front what data it wants access to.

Taking the survey required allowing access to your Facebook profile. Thanks to Facebook's default privacy settings (which only a small portion of users have changed), the survey app also pulled in the profile data of millions of Facebook friends. All of this data was forwarded to Cambridge Analytica, which rolled it up with data from other sources to build psychological profiles of potential voters.
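To make the friends-of-users mechanism concrete, here is a minimal Python sketch. It is a toy model, not Facebook's actual API or data model: `Profile`, `visible_to_friends_apps`, and `harvested_profiles` are hypothetical names, and the flag simply stands in for the default privacy setting that let apps installed by your friends read your profile.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    """A toy stand-in for a social-network profile (hypothetical model)."""
    user_id: str
    friends: set[str] = field(default_factory=set)
    # Hypothetical flag standing in for the default setting that let apps
    # installed by your friends read your profile. True mirrors the default.
    visible_to_friends_apps: bool = True


def harvested_profiles(app_users: set[str], network: dict[str, Profile]) -> set[str]:
    """Return every profile ID an app could read: the users who granted it
    access, plus each of their friends whose setting still allows it."""
    reachable = set(app_users)
    for uid in app_users:
        for fid in network[uid].friends:
            if network[fid].visible_to_friends_apps:
                reachable.add(fid)
    return reachable


# Tiny example network: only Alice installs the app.
network = {
    "alice": Profile("alice", {"bob", "carol"}),
    "bob": Profile("bob", {"alice", "dave"}),
    "carol": Profile("carol", {"alice"}, visible_to_friends_apps=False),
    "dave": Profile("dave", {"bob"}),
}

print(sorted(harvested_profiles({"alice"}, network)))  # ['alice', 'bob']

# Reported figures: about 50 million profiles from about 270,000 installs.
per_install = 50_000_000 / 270_000
print(round(per_install))  # 185
```

Bob never touched the app, yet his profile is collected because Alice did and he kept the default setting. Carol, who changed that setting, stays out, and Dave is not reached because he is only a friend of a friend. The final division shows that the reported numbers imply roughly 185 extra profiles per install, before accounting for overlap between friend lists.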


Facebook says it cares about your privacy, but that's lip service. The company wants you to be just comfortable enough that you keep sharing. Facebook is a business, but that business is not being a social network; the business is advertising. The free social network that most people use is a conduit for collecting data and distributing ads. Facebook was designed to get you to hand over as much information as possible and to spend as much time on it as possible, all in order to deliver more and better-targeted ads.

How we got here

Years ago we, as a collective of internet users, made a grand bargain. Given the choice between paying for a subscription service or getting a service for free and dealing with ads, we chose free with ads. Except we paid with our data and we had no concept of its value. Facebook, Google, and others are all designed to gather more and more data, and they've become more and more effective at synthesizing that data and precisely targeting users. Google's free product is an incredible search engine, but the company logs all of those searches to build a profile of you and sell ads against that profile.

This is true of most companies built on a free service, including Facebook, Google, Twitter, Spotify, even free tax preparation services. The real customer is whoever is buying your data or buying advertising slots based on your data.

If you're not paying for the product, then you are the real product.

That's just how the modern web works. What we failed to grasp was the scope of all that data and its potential. But the people collecting it certainly grasped it. They were playing a long game, and they made it fun for users. We were happy to fill out our profiles, delighted to post about our interests, comfortable handing over our files, and just fine with logging our searches.

You know the phrase "knowledge is power"? In the twenty-first century, data is power, and whoever controls it writes the rules.

Consequences and the presidential election

None of this excuses Facebook or Cambridge Analytica. That your data was readily available for exporting and exploiting — via your friends — should both appall and infuriate you. But this was not a breach or a leak; it was an exploitation of Facebook's own tools and rules.

Facebook and Cambridge Analytica will be hauled in front of Congressional committees for testimony. But what happened did not break any laws, and it's not clear whether there will be any consequences beyond revoking Cambridge Analytica's access to new Facebook user data. (Facebook requested the data be deleted, but it has no way to enforce that request.)


Your seemingly innocent and private profiles, musings, likes, and shares were all mined and assembled into a profile of how best to exploit your beliefs, fears, and hopes during the last election. It's disconcerting when this information is used for advertising; it's terrifying when that same data is used to sway the electorate.

Trump did not run a sophisticated traditional campaign. His traditional "ground game" was badly lacking, but he made up for it with loud media savvy (whether by accident or by design) and a quiet, unprecedented online campaign that understood the power of your data better than any before it. And now Donald Trump is President of the United States.

Data. Is. Power.

So what now?

This was the natural next step for a web whose terms we implicitly accepted without understanding the trade-offs. Users and companies have reaped rewards from this data, but this level of abuse was only a matter of time.

Our society is built on trust, and when that trust fails we make laws. We trusted Facebook, and the company gave away our data with an unenforceable developer agreement as the only safeguard. Facebook isn't alone: every company wants your data, and you should be reluctant to trust any of them. It doesn't matter what company we're talking about (Google, Uber, Apple, Amazon, Microsoft, Tesla, Spotify, et al.); they all want your data. Some are more judicious in how they handle it, but even if they're not selling your data, they will use it to sell to you.

I won't tell you to delete your Facebook account, but I also won't stop you. Nobody has to have a Facebook account. If you want to keep using Facebook, review your privacy settings, your profile information, which apps you've authorized, and even what you're posting and liking.

Don't trust Facebook or any other company with data you wouldn't give to a complete stranger. Don't log in to apps or services with your Facebook profile — and if they offer no alternative, use something else. Don't take random Facebook quizzes. Think twice before posting any personal information online. We all need to be cognizant of the data we're giving out.

That's the short game. In the longer term, we need systems in place to protect everyone. Silicon Valley is not going to fix this problem; its leaders are too naive about human nature to realize it is even a problem. We have laws and regulations governing airplanes, pharmaceuticals, construction, shipping, and everything else under the sun. I'm not normally one to advocate for more regulation, but it's clear that today's laws were not written for the modern internet.


Digital companies will claim that current laws and regulations are enough and that new ones will limit innovation. New regulation will indeed increase costs, but as long as there is money to be made, investment will not stop. Regulation didn't stop innovation in the automotive or aerospace industries, and it certainly won't bring tech innovation to a halt. Some coalition of tech companies will issue an "Internet Bill of Rights" or the like and say its principles will be sufficient to protect users. We've seen such pledges before. But anything short of federal law will be insufficient. The tech sector accounts for nearly one-tenth of the U.S. economy and is growing rapidly; it's in everybody's best interest for it to be sensibly regulated.

It's well past time that we demand tech companies act responsibly with our data. The internet of today and the hyper-customized AI services of tomorrow only work if we can trust them to respect and safeguard our data. We users need to get a better handle on what we're putting out there for free, what's being done with our data, and what we expect from the Facebooks, Googles, Amazons, and Apples of the world.

Either through negligence or malevolence, our implicit trust in these companies was misplaced. We need trust for all of this to work, and the only way for that trust to be restored is through concrete action and enforceable regulations.

from Android Central - Android Forums, News, Reviews, Help and Android Wallpapers http://ift.tt/2IEXM4z

via IFTTT
